Buying an expired domain: generating content at scale with GPT-3

Before diving into this topic, make sure to read the first two parts of my series about building an MVP.

Part One: Buying an expired domain

Part Two: Buying an expired domain: learnings on ranking capabilities

TL;DR

- We know our target revenue to spark our “this is it, this is working” moment.

- We don’t know how to reach the target, so we don’t know what we’re doing.

- We know we want to test, learn, and have fun.

Finding the content loop

We have now found a product that sells: a city map poster. You look up any city in the world, customise the poster as you wish, and a few days later it arrives framed at your door. Yet many of our users don’t seem to understand our value proposition: instead of searching for their city, they keep asking us whether this or that city is available.

That’s when I had the idea to create travel pages. For every city someone searches for, we store the internal search and generate a dedicated city page. Users can then reach these travel pages either through our internal navigation, until they find their city, or by typing the query into Google Search. Then comes the real reason behind these pages: Google Shopping. Instead of having a single product in our shopping feed, we could include one page per searched city. As a bonus, we could test how fast Google would index these pages, given that I planned to generate their content with GPT-3.
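As a rough illustration of the Shopping side, the sketch below turns a list of city names into one product row per city page and writes them as a tab-separated feed. It assumes a hypothetical domain (`example.com`), price and preview-image path, and uses only a subset of the attribute names from Google Merchant Center's product data specification; the article doesn't describe the real feed.

```python
import csv
import io
import re
import unicodedata

# Hypothetical values: the article does not give the real domain or price.
BASE_URL = "https://example.com/travel"
PRICE = "49.00 EUR"


def slugify(city: str) -> str:
    """Turn a city name into a URL-safe slug, e.g. 'São Paulo' -> 'sao-paulo'."""
    ascii_name = unicodedata.normalize("NFKD", city).encode("ascii", "ignore").decode()
    return re.sub(r"[^a-z0-9]+", "-", ascii_name.lower()).strip("-")


def feed_rows(cities):
    """Yield one product row per city page, keyed by Merchant Center attribute names."""
    for city in cities:
        slug = slugify(city)
        yield {
            "id": f"poster-{slug}",
            "title": f"{city} city map poster",
            "description": f"Framed map poster of {city}, customisable online.",
            "link": f"{BASE_URL}/{slug}",
            "image_link": f"{BASE_URL}/{slug}/preview.png",  # hypothetical preview path
            "availability": "in_stock",
            "price": PRICE,
        }


def to_tsv(rows) -> str:
    """Serialise the rows as a tab-separated feed with a header line."""
    rows = list(rows)
    if not rows:
        return ""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0]), delimiter="\t", lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


print(to_tsv(feed_rows(["Boston", "São Paulo"])))
```

Each new city page simply becomes one more row, which is what lets the feed grow with the internal searches.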

Remember, we are testing and learning. We try to get the maximum impact with the minimum effort, just to see how it goes. If it works well, we invest more time to iterate, and so on.

With travel pages, we found our content loop, or programmatic SEO tactic: content that we can produce at scale, with minimum effort, based on internal searches. We could also call it searchdexing, but I prefer the concept of a content loop. Our cities form a network that can scale quickly and easily, and that adds value every time it grows.
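A minimal sketch of the loop's first step, assuming internal searches are available as a list of raw query strings: normalise them, count them, and keep the ones that don't have a page yet. The function names and the `min_searches` threshold are hypothetical, and the sketch assumes every query is a city name; the article doesn't say how queries were validated or geocoded.

```python
import unicodedata
from collections import Counter


def normalise(query: str) -> str:
    """Lower-case, strip accents and collapse whitespace so 'São  Paulo' matches 'sao paulo'."""
    ascii_text = unicodedata.normalize("NFKD", query).encode("ascii", "ignore").decode()
    return " ".join(ascii_text.lower().split())


def new_city_pages(search_log, existing_pages, min_searches=1):
    """Return normalised queries that have no page yet, most-searched first."""
    counts = Counter(normalise(q) for q in search_log if q.strip())
    return [
        query
        for query, n in counts.most_common()  # ties keep first-seen order
        if n >= min_searches and query not in existing_pages
    ]


log = ["Boston", "boston ", "São Paulo", "Sao  Paulo", "Lyon", "Paris"]
print(new_city_pages(log, existing_pages={"paris"}))
```

Raising `min_searches` is one way to keep one-off typos from turning into pages.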

Generating content with GPT-3

So far, internal searches are the first input for our pages. We need more to create pages that are genuinely useful to visitors. Here’s the idea:

- We can’t list every searched city on a single page, for obvious user experience reasons, so we plan to create a /travel page listing all the continents, with internal links leading to countries, then to regions, and so on.

- We end up with pages that have no content of their own, just links leading to our city pages. We don’t care much about these pages; they simply help organise the content.

- We want to give users the option to buy a pre-built poster or to design their own. So we design our city pages with a preview of the default poster and a call to action to start customising it. We also add pre-built designs that users can add to their cart directly.

- At the bottom of the page, we add a few lines about the city and, based on the coordinates, internal links to the three closest cities. This doesn’t always work: sometimes the “closest city” is the same city listed twice with slightly different coordinates. The city description is fully automated using GPT-3.
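The “three closest cities” links can be computed with the haversine great-circle distance. This sketch assumes each city record has a name plus latitude and longitude in degrees; the `City` type, `closest_cities` and its `min_km` threshold are hypothetical. Skipping entries with the same name, or lying within a kilometre, is one possible guard against the duplicate-city issue mentioned in the list.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass(frozen=True)
class City:
    name: str
    lat: float  # degrees
    lon: float  # degrees


def haversine_km(a: City, b: City) -> float:
    """Great-circle distance between two cities, in kilometres."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlmb = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def closest_cities(city: City, cities, k: int = 3, min_km: float = 1.0):
    """Return the k nearest other cities, skipping same-name or near-duplicate entries."""
    candidates = [
        other
        for other in cities
        if other.name.lower() != city.name.lower() and haversine_km(city, other) >= min_km
    ]
    return sorted(candidates, key=lambda other: haversine_km(city, other))[:k]
```

A brute-force scan like this costs O(n) per city, which is manageable for a few thousand pages; a spatial index would only start to matter at a much larger scale.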

We used the OpenAI API with the Davinci model (text-davinci-002) to generate the content. We also scraped the first paragraph of each city’s Wikipedia article and used it as the input for the model.
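The article doesn't say how the Wikipedia paragraphs were scraped. One standard-library-only approach, sketched below, downloads the article HTML and keeps the first non-empty `<p>`, stripping citation markers such as `[1]`. The class and function names and the User-Agent string are hypothetical, and Wikipedia's markup can change, so treat this as a starting point rather than a robust scraper.

```python
import re
from html.parser import HTMLParser
from urllib.parse import quote
from urllib.request import Request, urlopen

CITATION = re.compile(r"\[\d+\]")  # footnote markers such as [1]


class FirstParagraphParser(HTMLParser):
    """Collect the text of the first non-empty <p> element in an HTML page."""

    def __init__(self):
        super().__init__()
        self._in_p = False
        self._buffer = []
        self.paragraph = None  # set once the first non-empty <p> closes

    def handle_starttag(self, tag, attrs):
        if tag == "p" and self.paragraph is None:
            self._in_p = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "p" and self._in_p:
            self._in_p = False
            text = " ".join(CITATION.sub("", "".join(self._buffer)).split())
            if text:  # Wikipedia often starts with an empty <p class="mw-empty-elt">
                self.paragraph = text

    def handle_data(self, data):
        if self._in_p:
            self._buffer.append(data)


def first_paragraph(html: str):
    parser = FirstParagraphParser()
    parser.feed(html)
    return parser.paragraph


def fetch_wikipedia_intro(title: str):
    """Download an English Wikipedia article and return its first paragraph (needs network)."""
    url = "https://en.wikipedia.org/wiki/" + quote(title.replace(" ", "_"))
    req = Request(url, headers={"User-Agent": "city-poster-demo/0.1"})  # hypothetical UA
    with urlopen(req, timeout=10) as resp:
        return first_paragraph(resp.read().decode("utf-8"))
```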

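```python
import os

import openai

# Legacy interface of the openai Python library (versions before 1.0);
# text-davinci-002 has since been retired by OpenAI.
openai.api_key = os.getenv("OPENAI_API_KEY")

# The prompt is the first paragraph of the city's Wikipedia article;
# the model continues the text from there.
response = openai.Completion.create(
    engine="text-davinci-002",
    prompt="Boston, officially the City of Boston, is the capital and most populous city of the Commonwealth of Massachusetts in the United States and 24th-most populous city in the country. The city proper covers about 48.4 sq mi with a population of 675,647 in 2020, also making it the most populous city in New England.\n",
    temperature=0.7,  # some variety between city descriptions
    max_tokens=256,   # upper bound on the length of the generated text
    top_p=1,
    frequency_penalty=0,
    presence_penalty=0,
)

print(response["choices"][0]["text"])
```

Getting GPT-3 pages indexed by Google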

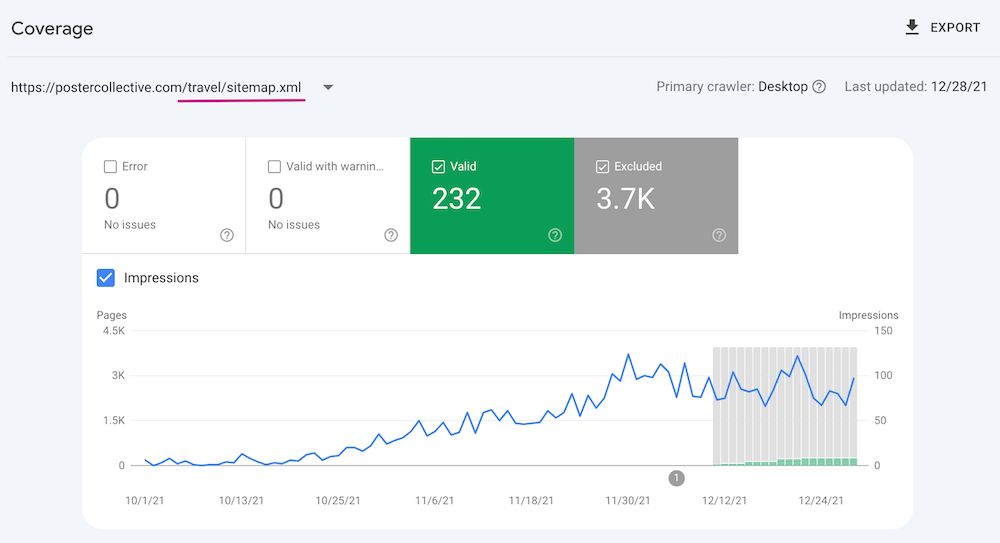

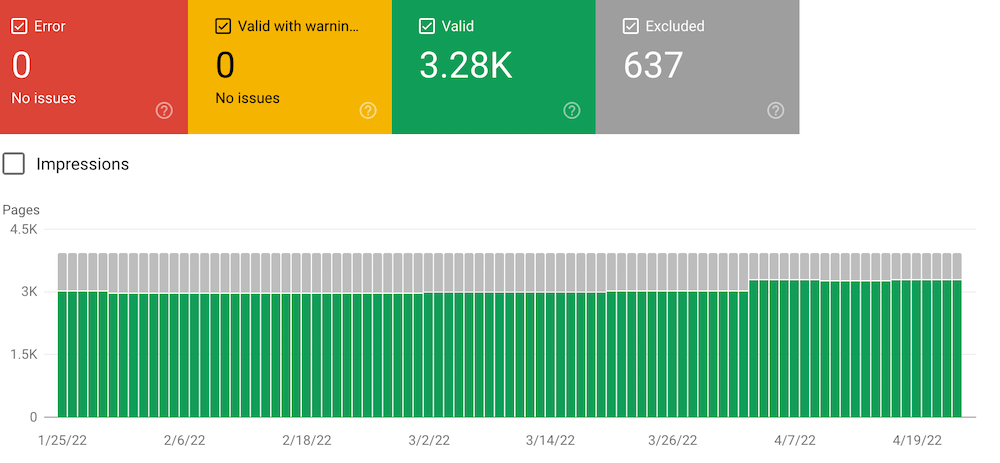

Our travel pages went live on December 9th, 2021. We created a dedicated sitemap containing all 3,000+ pages.
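A sitemap like this can be generated with Python's standard library. The sketch below follows the sitemaps.org protocol (the `urlset`/`url`/`loc` elements and the 50,000-URL limit per file); the `build_sitemap` name and the example URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # limit set by the sitemaps.org protocol


def build_sitemap(urls, lastmod=None) -> bytes:
    """Return a sitemap XML document listing the given absolute URLs."""
    urls = list(urls)
    if len(urls) > MAX_URLS_PER_SITEMAP:
        raise ValueError("split the URLs across several sitemaps and a sitemap index")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes '&' and friends
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod  # W3C date, e.g. 2021-12-09
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


print(build_sitemap(["https://example.com/travel/boston"], lastmod="2021-12-09").decode())
```

At around 3,000 pages, a single sitemap file is well within the limit.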

At first, Google had indexed a couple hundred pages, with a few dozen more indexed each day after December 9th.

I don’t have the data between the end of December and the end of January because I was working on something else and didn’t expect to write an article about this (Google, if you read this: please consider adding a date range selector here, as in the Performance report). Either way, our pages were (almost) fully indexed during that timeframe: it took fewer than 47 days for Google to index them.

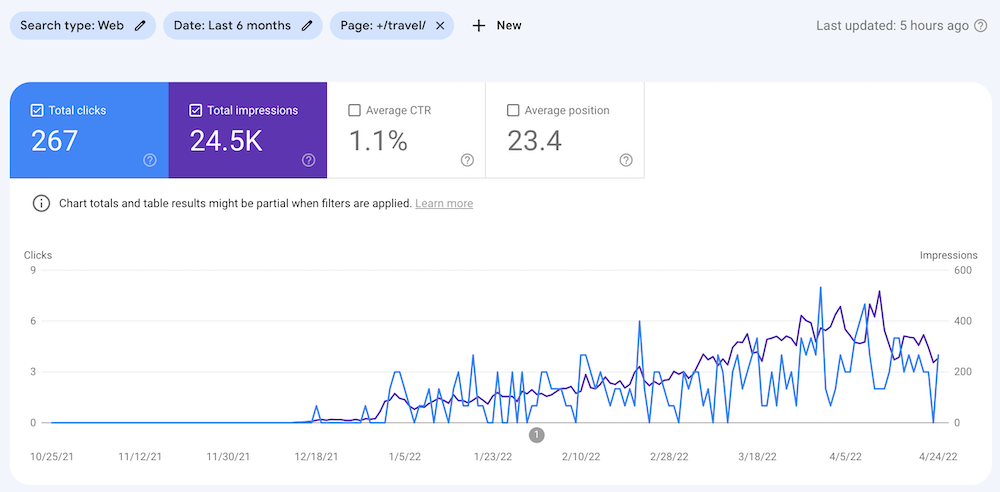

What to expect in terms of organic traffic?

Well, see for yourself. Not bad for a test that went live on December 9th, 2021, and hasn’t been updated since! 🙂 These pages generated about $500 in revenue from organic traffic. For now, we are focusing on other topics, but we know there is room for improvement to scale the traffic.