Week 42. What OpenAI and Tally teach us about feedback, persistence and product intuition.

Hey!

Welcome to this Week 42 edition!

How OpenAI uses ChatGPT

It’s fun to see how the OpenAI team uses its own product. They recently published a series on how they build with AI, and I particularly liked the one about unlocking insights faster.

The tool doesn’t replace data scientists. It frees them to do different work. [...] Ops teams now generate launch reports in minutes instead of days, freeing capacity to spend more time with customers. Product teams can learn in real time from customers, informing their roadmaps with faster feedback loops. This transformation has shifted how we can listen. [...] Feedback that once sat buried in the backlog is now central to how we build.

This is one of my favorite use cases for AI: helping teams focus on what actually matters for customers, while automating the painful, buried tasks no one really wants to do.

How Tally grew to $4M ARR

Someone (Oncrawl CEO François Goube, actually) once told me:

A successful entrepreneur is someone who keeps banging their head against the wall until it finally works because they’re convinced it will.

That feels exactly like Tally’s story.

When I read about new startup success stories, I often think back to Uber. They built something no one was explicitly asking for, but once it existed, no one could live without it.

Reading Marie Martens’ latest blog post reminded me of that same pattern:

Forms have existed since the early days of the internet. The space is crowded, every business needs a form, and yet… almost nobody actually likes using a form builder. Most of them are bloated with features, locked behind paywalls, and NPS scores to prove it. Because let’s be honest, nobody loves forms. But what if we could build a product people actually wanted to use? Even if we could convince just 1% of this saturated market, we believed we’d already be onto something. And that’s how it all started.

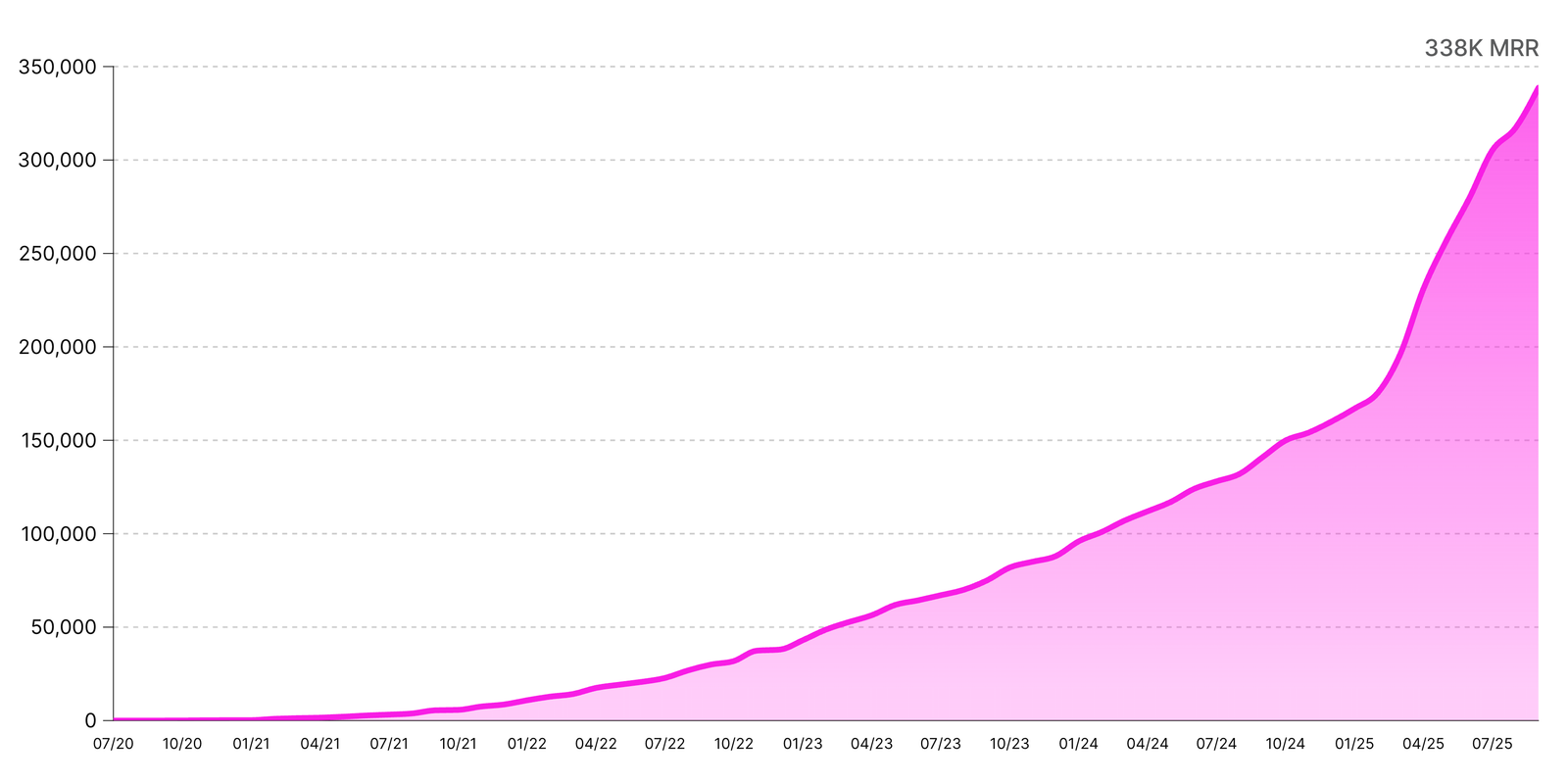

How we grew Tally to $4M ARR, fully bootstrapped

🍿 Snacks

I don’t have any snacks to share this week, but I do have a question for you!

Feel free to drop your thoughts in the comments.

I’m currently hiring for several positions, and I allow the use of AI for our test assessments. When a candidate told me he had used AI, I thanked him for being transparent. I do ask candidates to explain why they use AI, but in the end, it’s mostly based on trust.

When he shared his output, I could tell it was written by AI: the dashes, the emojis, some of the phrasing too. That’s when I realized he had missed the point.

I’m not against using AI at all. But for me, you first need the curiosity to understand the data yourself. In this test, I expect candidates to play around a bit in Google Sheets, maybe plot a random KPI like ad spend over time, just to see (literally see) the seasonality, for instance. He completely missed that because he outsourced 100% of the thinking.
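If you prefer code to spreadsheets, here is a minimal sketch of that kind of first look, written in Python with only the standard library. The numbers are made up for illustration (they are not from the actual test), and the names `AD_SPEND`, `seasonal_index` and `print_chart` are hypothetical. It averages spend per calendar month, divides by the overall average, and prints a rough text chart so the seasonality is visible at a glance.

```python
from collections import defaultdict
from statistics import mean

# Made-up monthly ad spend for two consecutive years, for illustration only.
YEAR_1 = [100, 100, 110, 110, 120, 120, 100, 90, 110, 130, 180, 200]
YEAR_2 = [110, 110, 120, 120, 130, 130, 110, 100, 120, 140, 190, 210]

# Rows of (year, month, spend), the shape you might get from a sheet export.
AD_SPEND = [(1, m, s) for m, s in enumerate(YEAR_1, start=1)] + [
    (2, m, s) for m, s in enumerate(YEAR_2, start=1)
]


def seasonal_index(rows):
    """Return {month: average spend for that month / overall average spend}.

    A value above 1 means the month tends to run above the overall average.
    """
    by_month = defaultdict(list)
    for _year, month, spend in rows:
        by_month[month].append(spend)
    overall = mean(spend for _year, _month, spend in rows)
    return {month: mean(values) / overall for month, values in sorted(by_month.items())}


def print_chart(index, width=20):
    """Print one bar per month; longer bars mean relatively higher spend."""
    for month, value in index.items():
        bar = "#" * round(value * width)
        print(f"{month:>2} {bar} {value:.2f}")


if __name__ == "__main__":
    print_chart(seasonal_index(AD_SPEND))
```

With this invented data, the longest bars land at the end of the year. That is the kind of pattern I would expect a candidate to notice before writing any recommendation.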

Maybe with a sharper prompt, he could have reached the same insights by asking, for example, “highlight the seasonality if there is any,” or “point out anomalies.”

But he didn’t. His recommendations were okay, but he didn’t have the context.

So my question is: when AI can handle the analysis, what skills do you still expect people to show?

See you next week,

Alice
